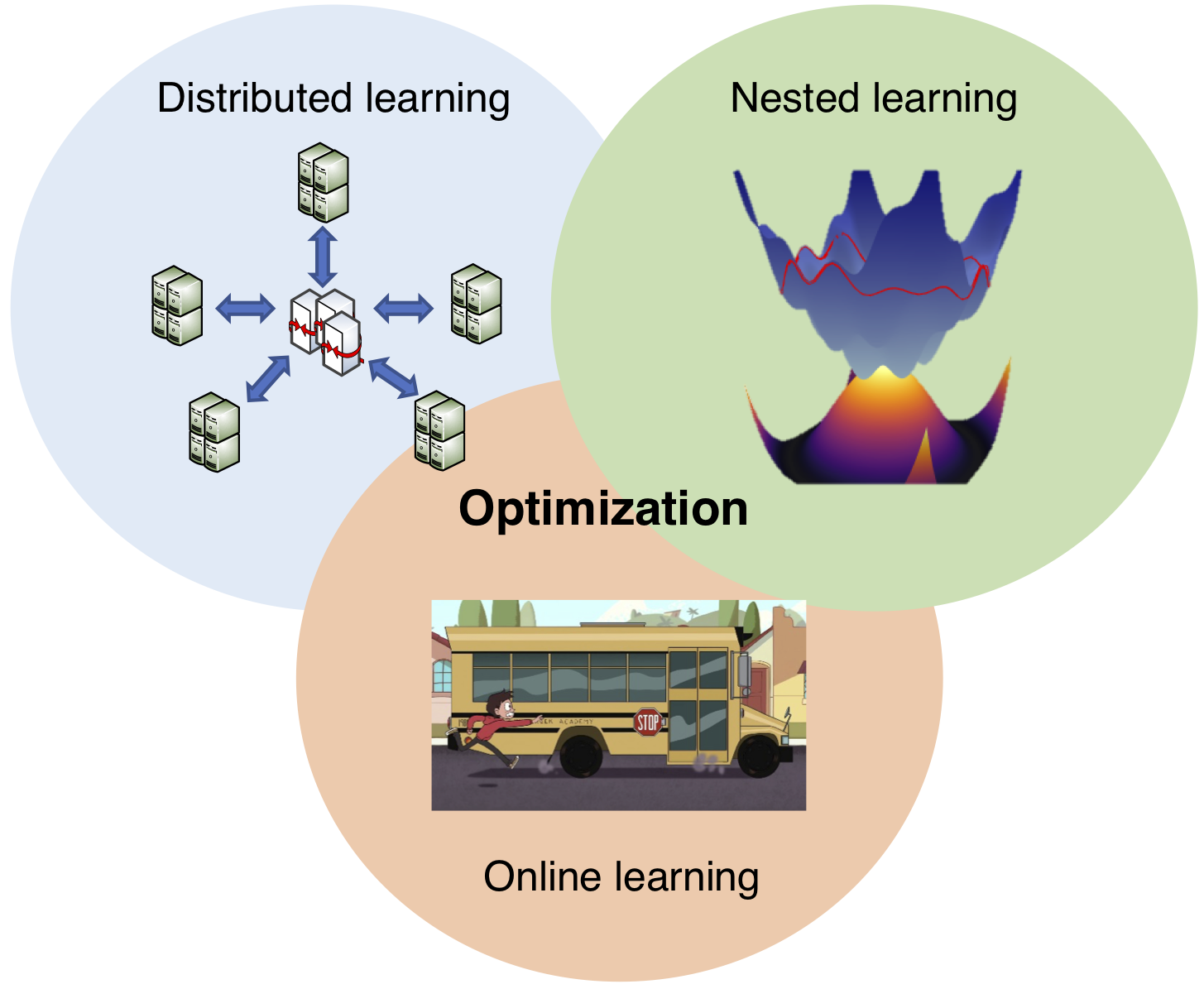

The past decade has witnessed successful applications of artificial intelligence (AI) and machine learning (ML) in almost every branch of science and engineering. The driving force of this success is that optimization is becoming the enabling factor of modern AI and ML applications. However, the efficiency of these optimization methods remains far from full satisfaction to meet the evergrowing demand that arises in new AI/ML applications in many real-world settings.

Bilevel optimization for learning with nested structures

Many ML problems today, such as meta learning, hyper-parameter optimization, and reinforcement learning, go beyond the above simple minimization structure, and have hierarchically coupled structures (termed the nested learning thereafter). As a result, computing (stochastic) gradients, i.e., the basic elements in first-order optimization machinery, becomes prohibitively expensive and even impossible in some situations. In this context, we develop new computational methods to address this issue. Our paper that includes the initial efforts in this direction was recognized as the ICASSP Outstanding Student Paper Award.

Related publications:

- T. Chen, Y. Sun and W. Yin, ‘‘Solving stochastic compositional Optimization is nearly as easy as solving stochastic optimization,’’ IEEE Transactions on Signal Processing (TSP), vol. 69, pp. 4937 - 4948, June 2021.

- T. Chen, Y. Sun, and W. Yin, ‘‘Tighter analysis of alternating stochastic gradient method for stochastic nested problems,’’ Proc. of Neural Information Processing Systems (NeurIPS), virtual, December 6-14, 2021. (Spotlight talk)

- T. Chen, Y. Sun, and W. Yin, ‘‘A single-timescale method for stochastic bilevel optimization,’’ arXiv preprint: 2102.04671, February 2021.

Distributed optimization in resource-limited regimes

State-of-the-art deep neural network models and contemporary ML algorithms tend to be resource-intensive, often requiring a large amount of data and significant computing power. The efficiency of optimization methods remains far from full satisfaction to meet the evergrowing demand of new AI/ML applications in resource-limited regimes. Resource intensity is a more severe issue when running ML applications in a distributed manner at wirelessly connected devices. In this context, we develop new distributed optimization methods to address this issue.

See more background and detials in distributed machine learning.

Related publications:

- T. Chen, G. B. Giannakis, T. Sun and W. Yin, ‘‘LAG: Lazily Aggregated Gradient for Communication-Efficient Distributed Learning,’’ Proc. of Neural Information Processing Systems (NeurIPS), Montreal, Canada, December 3-8, 2018. (Spotlight talk and poster)

- J. Sun, T. Chen, G. B. Giannakis, and Z. Yang, ‘‘Communication-Efficient Distributed Learning via Lazily Aggregated Quantized Gradients,’’ Proc. of Neural Information Processing Systems (NeurIPS), Vancouver, Canada, December 3-8, 2019.

- T. Chen, Y. Sun, and W. Yin, ‘‘Communication-Adaptive Stochastic Gradients for Distributed Learning,’’ IEEE Transactions on Signal Processing (TSP), vol. 69, pp. 4637 - 4651, July 2021.

Online optimization with complex environments

Online convex optimization, belonging to the class of time-varying optimization, is an emerging methodology for sequential inference with well documented merits. However, early works in this area rarely consider complex environments, which prevents one from implementing them in challenging application domains. These complex environments include settings where i) constraints can possibly be satisfied in the long term rather than a per-round basis; ii) online possibly bandit feedbacks incur unknown delay that prevents one from updating variables in a timely manner; and, iii) the optimization variable is a nonlinear function rather than a finite-dimensional vector. My research in this direction focuses on generalizing existing online optimization approaches to those challenging environments.

Related publications:

- T. Chen, Q. Ling and G. B. Giannakis, ‘‘An Online Convex Optimization Approach to Proactive Network Resource Allocation,’’ IEEE Transactions on Signal Processing (TSP), vol. 65, no. 24, pp. 6350-6364, Dec. 2017.

- B. Li, T. Chen, and G. B. Giannakis, ‘‘Bandit Online Learning with Unknown Delays,'' Proc. of Intl. Conf. on Artificial Intell. and Stat. (AISTATS), Naha, Japan, April 2019.

- Y. Shen, T. Chen, and G. B. Giannakis, ‘‘Random feature-based online multi-kernel learning in environments with unknown dynamics'' Journal of Machine Learning Research (JMLR), vol. 20, no. 22, pp. 1-36, February 2019. Also accepted in part to AISTATS 2018.