ECSE 4500/6500 Optimization and Learning in Distributed Systems

Course Information

Meeting Times: Mon and Thu, 10:10 AM - 11:30 AM

Webex link: https://rensselaer.webex.com/meet/chent18

Instructor: Tianyi Chen; Office Hours: Mondays at 5 pm – 6 pm

Teaching Assistant: Xuefei Li; Office Hours: Wednesdays at 5 pm – 7 pm

Course Description

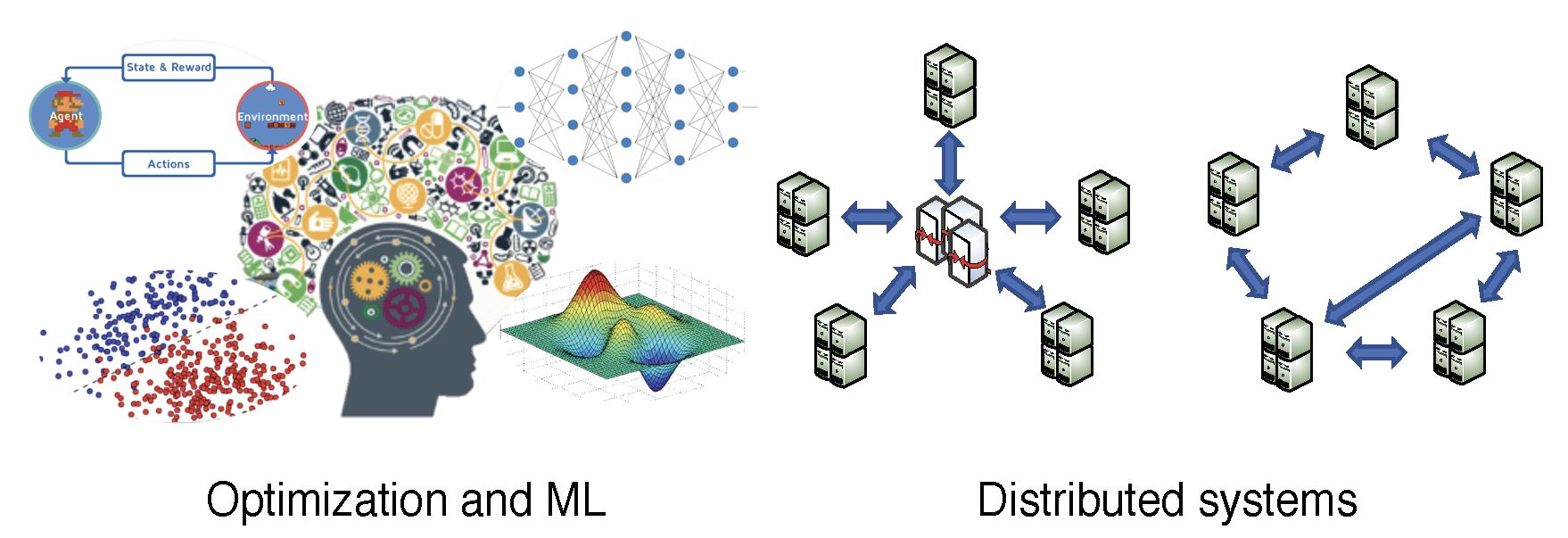

This course is about neither the hardware implementation nor the design of distributed systems. It is about algorithms for parallel and distributed optimization and their simulation. The course covers parallel and distributed optimization algorithms, together with their analyses, that are suited to the large-scale and distributed problems arising in machine learning and signal processing.
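
As a rough illustration of the kind of simulation meant here, the following Python sketch runs synchronous distributed gradient descent on a toy least-squares problem within a single process. It is not course material: the function names (`local_gradient`, `distributed_gd`), the data, the step size, and the number of rounds are all hypothetical choices made for this example.

```python
# Hypothetical illustration: synchronous distributed gradient descent,
# simulated in one process. Each "worker" holds a private data shard.

def local_gradient(w, shard):
    """Gradient of the local loss 0.5 * mean((a*w - b)^2) with respect to scalar w."""
    return sum(a * (a * w - b) for a, b in shard) / len(shard)

def distributed_gd(shards, step_size=0.1, num_rounds=200, w0=0.0):
    """Server loop: broadcast w, collect local gradients, average them, update w."""
    w = w0
    for _ in range(num_rounds):
        # In a real system these gradients would be computed in parallel.
        grads = [local_gradient(w, shard) for shard in shards]
        w -= step_size * sum(grads) / len(grads)
    return w

# Three workers; the data satisfy b = 2 * a, so the minimizer is w = 2.
shards = [
    [(1.0, 2.0), (2.0, 4.0)],
    [(3.0, 6.0)],
    [(0.5, 1.0), (1.5, 3.0)],
]
w_star = distributed_gd(shards)
print(w_star)
```

Only the averaged gradient is exchanged in each round, so the raw data never leave the workers. The step size is assumed small enough for this particular toy problem and would need retuning for other data.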

Prerequisites

This course is intended for graduate students and qualified undergraduate students with a strong mathematical and programming background. Undergraduate-level coursework in linear algebra, calculus, probability, and statistics is suggested. A background in programming (e.g., Python or MATLAB) is also necessary for the problem sets. A background in optimization and machine learning is preferred. At RPI, the required courses are MATH 2010 and ECSE 2500, and the suggested courses are MATP 4820 and ECSE 4840, or permission of the instructor.

Student Learning Outcomes

After taking the course, undergraduate students are expected to know:

1. how to formulate a distributed optimization problem from real-world learning, estimation, and control problems in distributed systems;

2. how to implement numerically stable algorithms to solve real-world engineering problems in distributed systems;

3. how to qualitatively evaluate the efficiency of a distributed algorithm for various applications in distributed systems.

In addition to the above, graduate students are also expected to know:

1. how to estimate the per-iteration complexity of a distributed optimization algorithm, that is, how to count the number of arithmetic operations it performs in each iteration;

2. how to design and modify numerically stable algorithms to solve real-world engineering problems for various applications in distributed systems.

Grading Criteria

Homework assignments: 6 in total, 60%

Course project: Proposal 5% + Presentation 10% + Report 25%

- ECSE 4500: Group project (1-2 students). It can be either theoretical or experimental, with approval from the instructor. You are encouraged to combine your current research with your term project.

- ECSE 6500: Individual project. It can be either theoretical or experimental, with approval from the instructor. You are encouraged to combine your current research with your term project.

Optional References

1. Dimitri P. Bertsekas and John N. Tsitsiklis, “Parallel and Distributed Computation: Numerical Methods,” Athena Scientific, 2015;

2. Stephen Boyd, Neal Parikh, Eric Chu, Borja Peleato and Jonathan Eckstein, “Distributed Optimization and Statistical Learning via the Alternating Direction Method of Multipliers,” Foundations and Trends in Machine Learning, 2011;

3. Guanghui Lan, “Lectures on Optimization Methods for Machine Learning,” preprint, 2019;

4. Ernest Ryu and Wotao Yin, “A First Course in Large-Scale Optimization,” preprint, 2020.

Course Content

1. Optimization basics and complexity measures

2. Basic machine learning models

3. Parallel and distributed architectures

4. Synchronization issues in parallel and distributed algorithms

5. Communication aspects of parallel and distributed systems

6. Synchronous distributed algorithms

   a) Distributed gradient descent

   b) Distributed/local stochastic gradient descent

   c) Distributed variance reduced stochastic gradient

- a) Decentralized gradient descent

b) Decentralized ADMM

c) Decentralized stochastic gradient descent

9. Decentralized algorithms with time-varying topology

10. Applications in machine learning, signal processing and control

   a) Federated learning

   b) Distributed reinforcement learning

   c) Distributed power system state estimation

   d) Distributed parameter estimation in sensor networks