Research

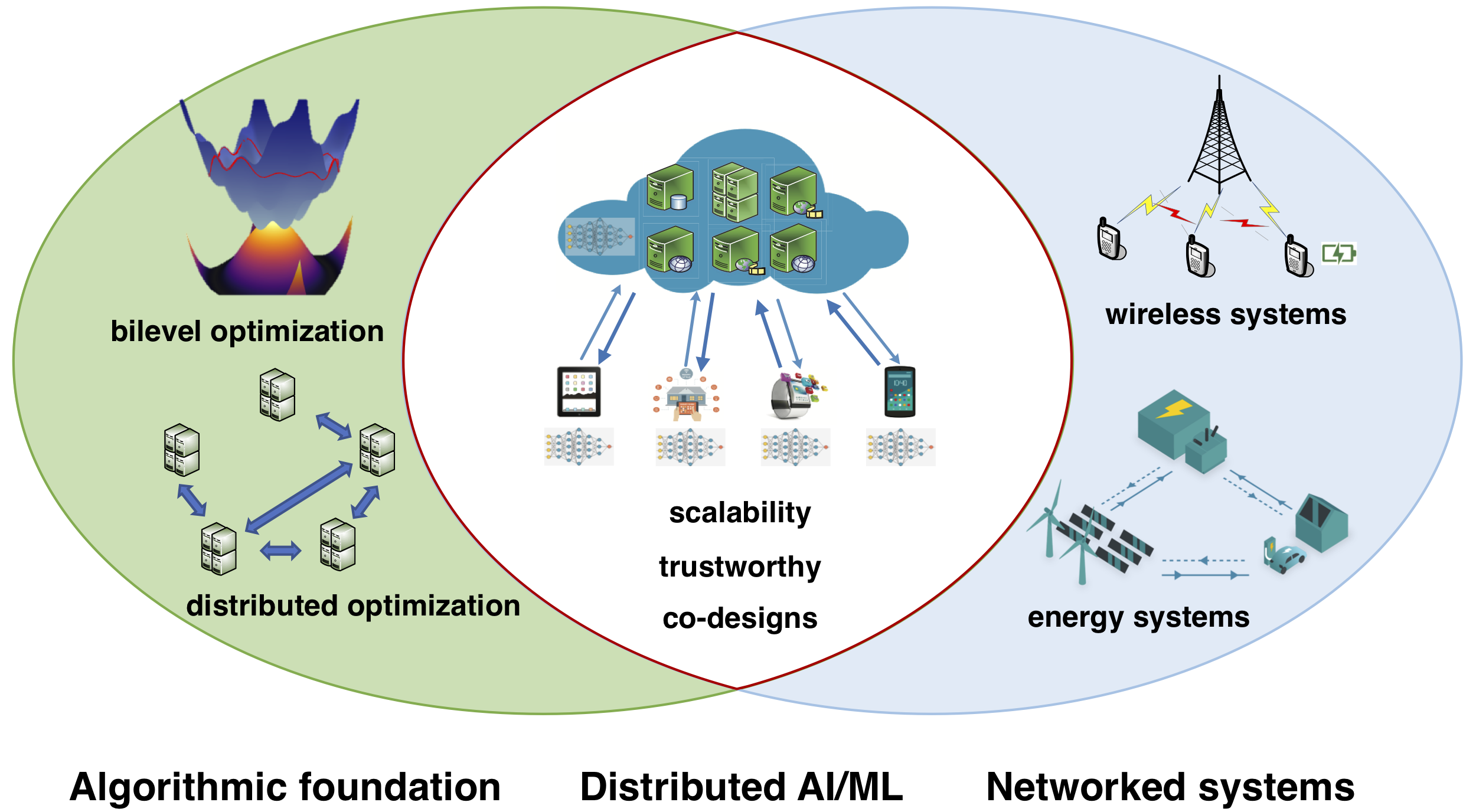

I have a background in statistical signal processing, and I now work at the intersection of optimization, machine learning, and networked systems. My current research focuses on i) the theoretical and algorithmic foundations of optimization and machine learning, driven by challenges arising in specific application domains; and ii) the application of advances in optimization and machine learning to key challenges in future networked systems, such as wireless and energy systems. The overarching objective of my research is to wed state-of-the-art optimization, learning, and signal processing tools with emerging networked systems, so that each can inspire and reinforce the development of the other.

My recent research falls into three categories:

- Optimization foundations of machine learning:

  Optimization has become a key enabler of modern artificial intelligence (AI) and machine learning (ML) applications. However, existing optimization methods are often not efficient enough to meet the ever-growing demands of many real-world AI/ML applications. In this context, I have been working on optimization methods for AI/ML problems that go beyond the standard empirical risk minimization structure, including bilevel, distributed, and time-varying optimization.

- Distributed machine learning over networked systems:

  Given the growing sensing and computing power of smart devices, together with increasing concerns about data privacy, a sizeable share of AI/ML tasks is expected to run collaboratively on devices in wireless networks, as in federated learning. In this context, my research centers on two fundamental issues of distributed (reinforcement) learning algorithms, scalability and trustworthiness, including topics such as communication-efficient and Byzantine-resilient learning.

- Learning for optimizing autonomous systems:

  Optimally allocating communication and network resources is a crucial task in autonomous systems. In the past, most resource allocation schemes were based on a pure optimization viewpoint, which often leads to slow convergence. My research takes a fresh statistical learning and/or bandit learning perspective on this problem, enabling resource allocation in challenging autonomous systems.

Research Support

I gratefully acknowledge support from the National Science Foundation (Awards 2047177 and 2134168), the IBM AI Horizons Network, Amazon Research Awards, and Cisco Research Gifts.